Kestra is an open-source data orchestrator and scheduler. With Kestra, data workflows, called flows, use the YAML format and are executed by its engine via an API call, the user interface, or a trigger (webhook, schedule, SQL query, Pub/Sub message, …).

The key concepts of Kestra are:

- The flow: describes how the data will be orchestrated (in other words, the workflow). It is a sequence of tasks.

- The task: a step in the flow that performs an action on one or more pieces of incoming data (the inputs) and then produces zero or one output.

- The execution: an instance of a flow, created when the flow is triggered.

- The trigger: defines how a flow can be triggered.
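To make the relationship between these concepts concrete, here is a minimal Python sketch. The classes `Task`, `Flow`, and `Execution`, the function `trigger_flow`, and the state labels are hypothetical and are not Kestra's API; Kestra's engine does far more (parallelism, retries, persistence). The sketch only assumes what is described above: a flow is an ordered list of tasks, each task produces zero or one output that later tasks can read, and every trigger creates a new, independent execution.

```python
import uuid
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional

# Hypothetical, simplified model of Kestra's core concepts; these are NOT Kestra's classes.


@dataclass
class Task:
    id: str
    # The action receives the outputs produced so far (its inputs) and returns
    # this task's output, or None when the task produces no output.
    action: Callable[[Dict[str, Any]], Optional[Any]]


@dataclass
class Flow:
    id: str
    namespace: str
    tasks: List[Task]  # executed in order


@dataclass
class Execution:
    flow: Flow
    trigger: str  # what started it, e.g. "ui", "api", "schedule" (illustrative labels)
    id: str = field(default_factory=lambda: uuid.uuid4().hex)
    state: str = "CREATED"
    outputs: Dict[str, Any] = field(default_factory=dict)


def trigger_flow(flow: Flow, trigger: str) -> Execution:
    """Create a new execution (an instance of the flow) and run its tasks sequentially."""
    execution = Execution(flow=flow, trigger=trigger)
    execution.state = "RUNNING"
    for task in flow.tasks:
        output = task.action(execution.outputs)
        if output is not None:  # zero or one output per task, keyed by task id
            execution.outputs[task.id] = output
    execution.state = "SUCCESS"
    return execution


# Usage: one flow definition, two independent executions.
flow = Flow(
    id="example",
    namespace="dev",
    tasks=[
        Task("extract", lambda outputs: {"rows": [1, 2, 3]}),
        Task("count", lambda outputs: {"value": len(outputs["extract"]["rows"])}),
        Task("log", lambda outputs: print("count =", outputs["count"]["value"])),
    ],
)
first = trigger_flow(flow, trigger="ui")
second = trigger_flow(flow, trigger="schedule")
```

The `log` task returns nothing, so it contributes no output, while `count` reads the output of `extract` through the task id.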

To start Kestra locally, the easiest way is to use the provided Docker Compose file and run it with `docker compose up`.

Download the Docker Compose file using the following command:

```shell
curl -o docker-compose.yml https://raw.githubusercontent.com/kestra-io/kestra/develop/docker-compose.yml
```

Make sure that Docker is running. Then, use the following command to start the Kestra server:

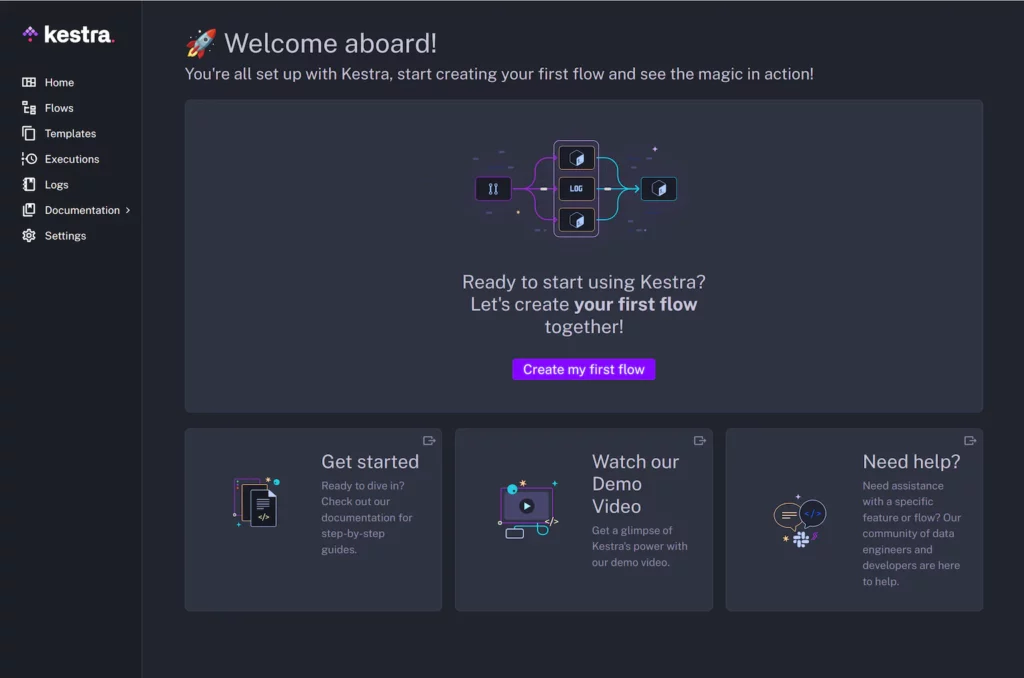

```shell
docker compose up -d
```

Open http://localhost:8080 in your browser to launch the UI.

On the left menu, you will have access to all the functionalities provided by the Kestra UI:

- Home: The Home page contains a dashboard of flow executions.

- Flows: The Flows page allows flow management and execution.

- Templates: The Templates page allows flow template management.

- Executions: The Executions page allows flow execution management.

- Logs: The Logs page allows access to all task logs.

- Blueprints: The Blueprints page provides a catalog of ready-to-use flow examples.

- Documentation: The Documentation page provides access to various documentation pages.

- Settings: The Settings page allows configuring the Kestra UI.

My first Kestra flow

To create a flow, go to the Flows menu and click the Create button at the bottom right. An editor opens in which you can enter the YAML definition of the flow.

```yaml
id: hello-world
namespace: fr.loicmathieu.example
tasks:
  - id: hello
    type: io.kestra.core.tasks.debugs.Echo
    format: "Hello World"
```

A flow has:

- An `id` property, its unique identifier within a namespace.

- A `namespace` property. Namespaces are hierarchical, like directories in a file system.

- A `tasks` property, the list of tasks to perform when the flow is executed.
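Because a flow `id` only needs to be unique within its namespace, a flow is effectively identified by its namespace and id together. The sketch below illustrates that and the directory-like hierarchy, assuming namespace levels are separated by dots as in `fr.loicmathieu.example`; the helpers `parent_namespaces` and `is_within` are hypothetical, not part of Kestra.

```python
from typing import Dict, List, Tuple


def parent_namespaces(namespace: str) -> List[str]:
    """Parents of a dot-separated namespace, from the root down (like parent directories)."""
    parts = namespace.split(".")
    return [".".join(parts[:i]) for i in range(1, len(parts))]


def is_within(namespace: str, parent: str) -> bool:
    """True if `namespace` is `parent` itself or nested below it, like a sub-directory."""
    return namespace == parent or namespace.startswith(parent + ".")


# A flow id only has to be unique within its namespace, so a registry keyed by
# (namespace, id) can hold two flows that share the id "hello-world".
flows: Dict[Tuple[str, str], str] = {
    ("fr.loicmathieu.example", "hello-world"): "first flow",
    ("dev", "hello-world"): "second flow",
}
```

Checking `parent + "."` rather than a bare prefix keeps `france.example` from being treated as nested under `fr`.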

Here we have added a single task with three properties:

- An `id` property, its unique identifier within the flow.

- A `type` property, the name of the class that implements the task.

- A `format` property, specific to Echo tasks, which defines the format of the message to log (an Echo task is like the `echo` shell command).
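These rules can be expressed as a small check. This is a minimal sketch of a hypothetical `validate_flow` helper working on the dictionary a YAML parser would produce for the flow above; it only covers the rules listed here (required `id`, `namespace`, and `tasks`, plus an `id` and a `type` for each task, with task ids unique within the flow), whereas Kestra validates flows much more thoroughly when they are saved.

```python
from typing import Any, Dict, List


def validate_flow(flow: Dict[str, Any]) -> List[str]:
    """Return a list of problems found in a flow definition (empty if none)."""
    errors: List[str] = []
    for key in ("id", "namespace", "tasks"):
        if key not in flow:
            errors.append(f"missing flow property '{key}'")
    seen_ids = set()
    for index, task in enumerate(flow.get("tasks", [])):
        for key in ("id", "type"):
            if key not in task:
                errors.append(f"task #{index}: missing '{key}'")
        task_id = task.get("id")
        if task_id is None:
            continue
        if task_id in seen_ids:  # task ids must be unique within the flow
            errors.append(f"duplicate task id '{task_id}'")
        seen_ids.add(task_id)
    return errors


# The hello-world flow above, as the dictionary a YAML parser would produce.
hello_world = {
    "id": "hello-world",
    "namespace": "fr.loicmathieu.example",
    "tasks": [
        {"id": "hello", "type": "io.kestra.core.tasks.debugs.Echo", "format": "Hello World"},
    ],
}
# A deliberately broken flow: no namespace, second task lacks a type and reuses an id.
broken = {"id": "broken", "tasks": [{"id": "a", "type": "t"}, {"id": "a"}]}
```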

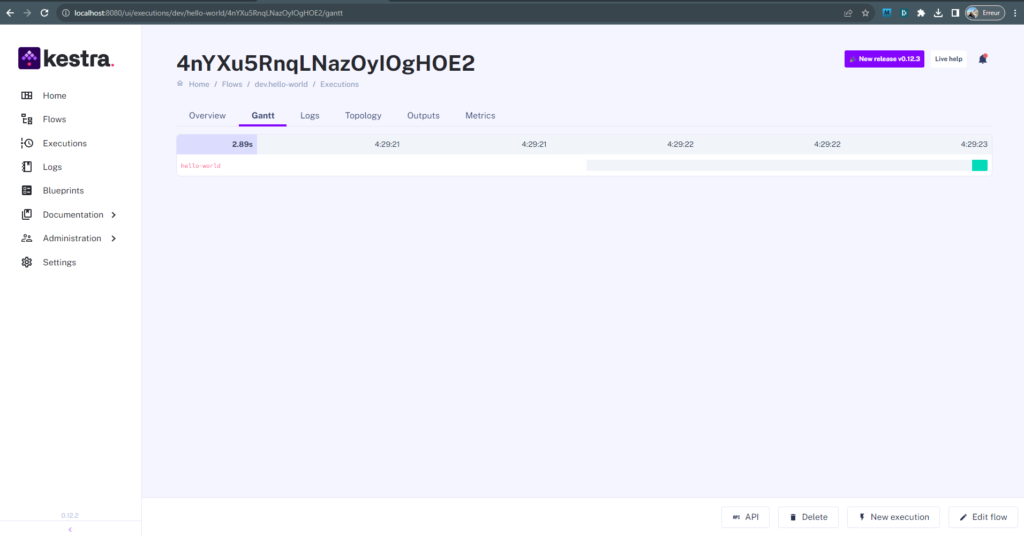

To launch this first flow, go to the Executions tab and click the New Execution button. This switches to the Gantt view of the execution, which is updated in real time with the execution status.

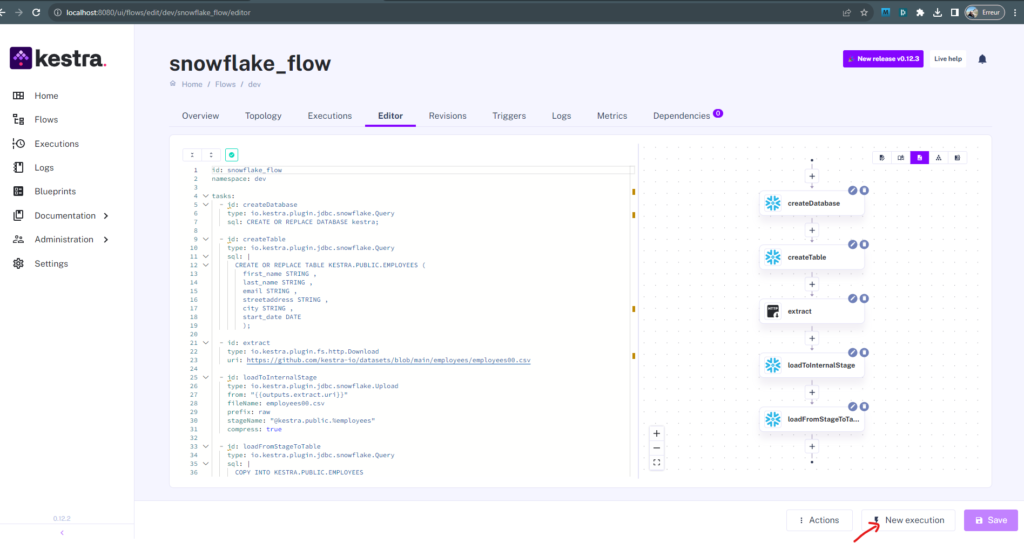

Snowflake Kestra flow

Snowflake is one of the most popular cloud data warehouse technologies.

Kestra can query a Snowflake server with the Query task to insert, update, and delete data. The Query task offers numerous properties, including auto-committing SQL statements, different fetch operations, specifying access-control roles, and storing fetch results. When the `store` property is true, the Query task can store large results as its output.
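The difference between fetching results and storing them can be sketched as follows. This is a hypothetical illustration, not the Query task's implementation: SQLite stands in for Snowflake, CSV stands in for whatever file format Kestra actually uses, and the helper `run_query` and its output keys `rows`, `uri`, and `size` are chosen for illustration. The idea is that fetched rows travel inside the task output, whereas stored rows are written to a file and only a reference to it is returned, which is why storing suits large results.

```python
import csv
import sqlite3
import tempfile
from typing import Any, Dict


def run_query(conn: sqlite3.Connection, sql: str, store: bool = False) -> Dict[str, Any]:
    """Run a SELECT and return its result either inline or as a file reference."""
    cursor = conn.execute(sql)
    columns = [description[0] for description in cursor.description]
    if not store:
        # Fetch: every row becomes part of the output itself (fine for small results).
        rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
        return {"rows": rows, "size": len(rows)}
    # Store: stream the rows to a file and only return its location and row count.
    size = 0
    with tempfile.NamedTemporaryFile("w", newline="", suffix=".csv", delete=False) as handle:
        writer = csv.writer(handle)
        writer.writerow(columns)
        for row in cursor:
            writer.writerow(row)
            size += 1
    return {"uri": handle.name, "size": size}


# Usage with an in-memory SQLite database standing in for Snowflake.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (first_name TEXT, city TEXT)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?)",
    [("Ada", "London"), ("Alan", "Wilmslow"), ("Grace", "Arlington")],
)
fetched = run_query(conn, "SELECT * FROM employees ORDER BY first_name")
stored = run_query(conn, "SELECT * FROM employees ORDER BY first_name", store=True)
```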

```yaml
id: snowflake_flow
namespace: dev

tasks:
  - id: createDatabase
    type: io.kestra.plugin.jdbc.snowflake.Query
    sql: CREATE OR REPLACE DATABASE kestra;

  - id: createTable
    type: io.kestra.plugin.jdbc.snowflake.Query
    sql: |
      CREATE OR REPLACE TABLE KESTRA.PUBLIC.EMPLOYEES (
        first_name STRING,
        last_name STRING,
        email STRING,
        streetaddress STRING,
        city STRING,
        start_date DATE
      );

  # Download the CSV file; the raw.githubusercontent.com URL returns the file itself,
  # whereas a github.com/.../blob/... URL returns an HTML page.
  - id: extract
    type: io.kestra.plugin.fs.http.Download
    uri: https://raw.githubusercontent.com/kestra-io/datasets/main/employees/employees00.csv

  # Upload the downloaded file to the table stage of EMPLOYEES.
  - id: loadToInternalStage
    type: io.kestra.plugin.jdbc.snowflake.Upload
    from: "{{outputs.extract.uri}}"
    fileName: employees00.csv
    prefix: raw
    stageName: "@kestra.public.%employees"
    compress: true

  # Load the staged file(s) into the table.
  - id: loadFromStageToTable
    type: io.kestra.plugin.jdbc.snowflake.Query
    sql: |
      COPY INTO KESTRA.PUBLIC.EMPLOYEES
      FROM @kestra.public.%employees
      FILE_FORMAT = (type = csv field_optionally_enclosed_by='"' skip_header = 1)
      PATTERN = '.*employees0[0-9].csv.gz'
      ON_ERROR = 'skip_file';

# Connection settings shared by every task of the given type
# (replace the account, username, and password placeholders with your own).
taskDefaults:
  - type: io.kestra.plugin.jdbc.snowflake.Query
    values:
      url: jdbc:snowflake://account.snowflakecomputing.com
      username: username
      password: password
      warehouse: COMPUTE_WH
  - type: io.kestra.plugin.jdbc.snowflake.Upload
    values:
      url: jdbc:snowflake://account.snowflakecomputing.com
      username: username
      password: password
      warehouse: COMPUTE_WH
```
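The `taskDefaults` section avoids repeating the connection settings on every Snowflake task. Here is a minimal sketch of that merge, assuming defaults apply to tasks whose `type` matches exactly and that a property set on the task itself takes precedence over the default. The helper `apply_task_defaults` and the `heavyQuery` task are hypothetical, and the merge shown is shallow.

```python
import copy
from typing import Any, Dict, List


def apply_task_defaults(tasks: List[Dict[str, Any]],
                        task_defaults: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return copies of `tasks` with the default values of their type filled in."""
    resolved = []
    for task in tasks:
        merged: Dict[str, Any] = {}
        for default in task_defaults:
            if default["type"] == task["type"]:
                merged.update(copy.deepcopy(default["values"]))
        merged.update(task)  # a property set on the task itself takes precedence
        resolved.append(merged)
    return resolved


defaults = [{
    "type": "io.kestra.plugin.jdbc.snowflake.Query",
    "values": {"url": "jdbc:snowflake://account.snowflakecomputing.com",
               "warehouse": "COMPUTE_WH"},
}]
tasks = [
    {"id": "createDatabase", "type": "io.kestra.plugin.jdbc.snowflake.Query",
     "sql": "CREATE OR REPLACE DATABASE kestra;"},
    # Hypothetical task that overrides the default warehouse.
    {"id": "heavyQuery", "type": "io.kestra.plugin.jdbc.snowflake.Query",
     "sql": "SELECT 1", "warehouse": "LARGE_WH"},
    {"id": "extract", "type": "io.kestra.plugin.fs.http.Download",
     "uri": "https://raw.githubusercontent.com/kestra-io/datasets/main/employees/employees00.csv"},
]
resolved = apply_task_defaults(tasks, defaults)
```

The Download task is left untouched because no default targets its type.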

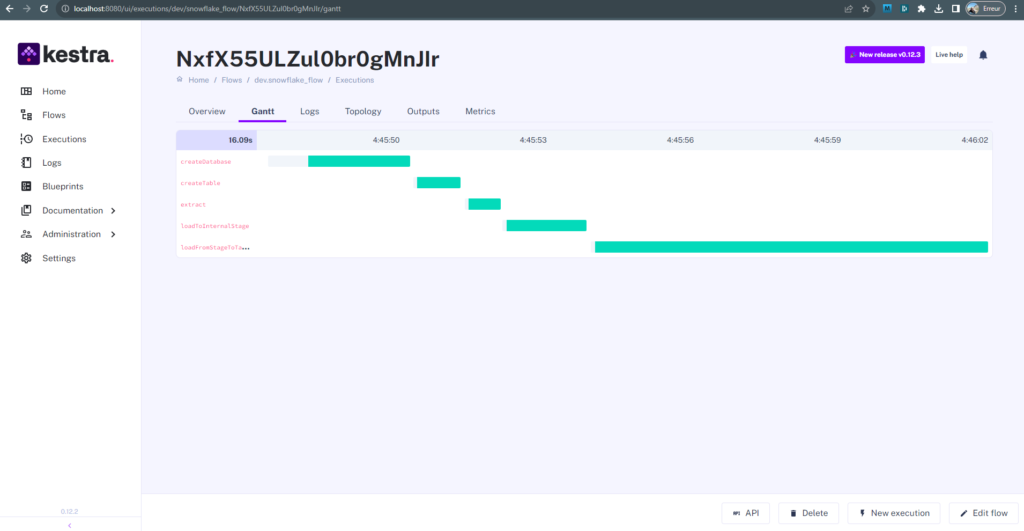
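The `PATTERN` clause of `COPY INTO` is a regular expression applied to the staged file paths. The sketch below uses Python's `re` module to show which paths it selects, assuming that Snowflake requires the pattern to match the whole path and that the uploaded file lands as `raw/employees00.csv.gz` (from the `prefix` and `compress` settings of the Upload task). The `matches` helper and the extra paths are hypothetical. Note that the unescaped dots match any character; writing `\.csv\.gz` would be stricter.

```python
import re

# PATTERN from the COPY INTO statement of the flow.
PATTERN = r".*employees0[0-9].csv.gz"


def matches(path: str) -> bool:
    """True if the whole staged path matches the pattern."""
    return re.fullmatch(PATTERN, path) is not None


staged = [
    "raw/employees00.csv.gz",  # the file uploaded by the flow (assumed name)
    "raw/employees07.csv.gz",  # hypothetical: would also be loaded
    "raw/employees10.csv.gz",  # hypothetical: "employees1..." does not match
    "raw/employees00.csv",     # hypothetical: not compressed, so no ".gz" suffix
]
selected = [path for path in staged if matches(path)]
```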

To run this Snowflake flow, go to the Executions tab and click the New Execution button. This switches to the Gantt view of the execution, which is updated in real time with the execution status.

The Logs tab allows you to see the runtime logs.

Conclusion

In this introductory article, we have seen the main concepts of Kestra and some flow examples.

To go further, you can check out the online documentation as well as the plugin list.

Kestra is an open-source community project available on GitHub. Feel free to:

- Put a star or open an issue on its repository.

- Follow Kestra on Twitter or LinkedIn.

- Contact the team on Slack